Google is making a fast specialized TPU chip for edge devices and a suite of services to support it – TechCrunch

In a substantial move toward owning the entire AI stack, Google today announced that it will roll out a version of its Tensor Processing Unit (TPU), a custom chip optimized for its machine learning framework TensorFlow, that is built for inference on edge devices.

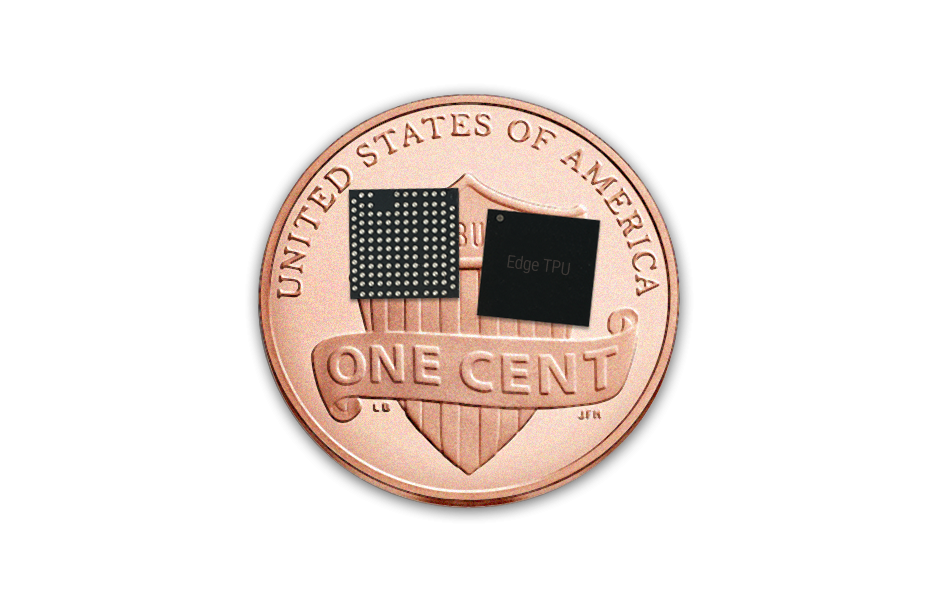

That’s a bit of a word salad to unpack, but here’s the end result: Google wants to offer developers building products around machine learning, such as image or speech recognition, a complete suite of customized hardware that it owns from the device all the way through to the server. Google will have the cloud TPU (the third version of which will soon roll out) to handle training models for various machine learning-driven tasks, and the inference from those models will then run on a specialized chip that uses a lighter version of TensorFlow and consumes less power. Google is exploiting an opportunity to split training and inference across two different sets of hardware and dramatically reduce the footprint required in the device that’s actually capturing the data. That would result in faster processing, lower power consumption and, potentially more importantly, a dramatically smaller surface area for the actual chip.

Google is also rolling out a new set of services to compile TensorFlow (Google’s machine learning development framework) into a lighter-weight version that can run on edge devices without having to call the server for those operations. That, again, reduces latency, and the payoff could range from safety (in autonomous vehicles) to simply a better user experience (voice recognition). As competition heats up in the chip space, both from the larger companies and from an emerging class of startups, nailing these use cases is going to be really important for the big players. That’s especially true for Google, which also wants to own the actual development framework in a world where there are multiple options like Caffe2 and PyTorch.

Google will be releasing the chip on a kind of modular board not unlike the Raspberry Pi, which will get it into the hands of developers who can tinker and build unique use cases. But more importantly, it’ll help entice developers who already use TensorFlow as their primary machine learning framework with the idea of a chip that’ll run those models even faster and more efficiently. That could open the door to new use cases and ideas and, should it be successful, will lock those developers further into Google’s cloud ecosystem at both the hardware (the TPU) and framework (TensorFlow) levels. While Amazon owns most of the stack for cloud computing (with Azure being the other largest player), it looks like Google wants to own the whole AI stack, and not just offer on-demand GPUs as a stopgap to keep developers operating within that ecosystem.

Thanks to the proliferation of GPUs, machine learning has become increasingly common across a variety of use cases. Those use cases don’t just require the horsepower to train a model to identify what a cat looks like. They also need the ability to take in an image and quickly identify that said four-legged animal is a cat, based on a model trained on tens of thousands (or more) images of cats. GPUs were great for both jobs, but it’s clear that better hardware is necessary with the emergence of use cases like autonomous driving, photo recognition on cameras, or a variety of others for which even millisecond-level lag is too much and power consumption, or surface area, is a dramatic limiting factor.

The edge-specialized TPU is an ASIC (application-specific integrated circuit), a breed of chip that’s increasingly popular for specific use cases like mining cryptocurrency, where larger companies like Bitmain build them. ASICs excel at doing specific things really well, which has opened up an opportunity to tap various niches with chips optimized for exactly those calculations. These kinds of edge-focused chips tend to do a lot of low-precision calculations very fast, which makes shuttling data between memory and the actual core significantly less complicated and consumes less power as a result.
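To make the idea of low-precision calculation concrete, here is a minimal sketch of 8-bit quantization in plain Python. It is an illustration of the general technique, not a description of how Google’s chip or its tooling actually works; the function names (`quantize`, `int_dot`) and the sample numbers are made up for this example.

```python
def quantize(values, num_bits=8):
    """Map floats to signed integers using one shared scale (symmetric quantization)."""
    qmax = 2 ** (num_bits - 1) - 1            # 127 when num_bits is 8
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs else 1.0  # avoid dividing by zero for all-zero input
    return [round(v / scale) for v in values], scale


def int_dot(q_a, scale_a, q_b, scale_b):
    """Dot product done with integer multiply-accumulate, rescaled to a float once at the end."""
    acc = sum(x * y for x, y in zip(q_a, q_b))
    return acc * scale_a * scale_b


# Hypothetical weights and inputs, chosen only for illustration.
weights = [0.42, -1.30, 0.07, 0.88]
inputs = [1.00, 0.50, -2.00, 0.25]

q_w, s_w = quantize(weights)
q_x, s_x = quantize(inputs)

exact = sum(w * x for w, x in zip(weights, inputs))
approx = int_dot(q_w, s_w, q_x, s_x)

print(f"full-precision result: {exact:.4f}")
print(f"8-bit integer result:  {approx:.4f}")
```

The integer version gives up a little accuracy, but each value fits in a single byte and the inner loop uses only integer arithmetic, which is the kind of trade-off that lets a small chip move less data and draw less power.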

While Google’s entry into this arena has long been a whisper in the Valley, this is a stake in the ground: the company wants to own everything from the hardware all the way up to the end user experience, passing through the development layer and others on the way there. It might not necessarily alter the calculus of the ecosystem. Even though the chip ships on a development board that gives developers a playground, Google still has to make an effort to get the hardware designed into other pieces of hardware, not just its own, if it wants to rule the ecosystem. That’s easier said than done, even for a juggernaut like Google, but it is a big salvo from the company that could have rather significant ramifications down the line as every big company races to create its own custom hardware stack specialized for its own needs.
